Discover how data extraction costs are determined and how Grepsr simplifies pricing by focusing on delivering only the data you need. This guide equips you to confidently budget your data project with precision.

We once had a prospect submit a data extraction request to our friendly chatbot, Dextor.

The prospect—let’s call him John—asked to speak with a sales agent. I was overjoyed, thinking this could be a new deal in the making.

Dextor, being proactive, asked John to describe his use case.

Alas, John said he had recently bought a Samsung Galaxy S10, and for some reason, it wouldn’t turn on. He wanted to know if we could extract photos from it, and asked for a quote!

After this message, my hopes quickly subsided, but soon enough, the loss of a ‘real’ use case turned into one of those funny office moments.

We joked that the tech team would go crazy if we put it on record that the use case was lost for ‘technical reasons.’

Jokes aside, this highlighted a key challenge in web scraping: quoting a price is rarely straightforward.

Web scraping can be applied to countless use cases. These range from pricing data to competitor analysis. Costs depend on the complexity of the project. This includes factors like data volume, extraction frequency, and any technical obstacles.

Setting the Record Straight

Before discussing how we price a data extraction project, it’s important to first address a few essential fundamentals.

Web scraping is the process of automating the collection of web data. This data can range from product prices on e-commerce sites to information buried in PDF documents.

To accomplish this, we first set up a crawler that scans the web and identifies the data points to collect. Once the crawler has mapped the data, a scraper is deployed to extract it.

It’s important to note that the crawler setup must operate within a virtual environment, which also requires a robust data management platform to handle and store the extracted data.

This is particularly crucial for enterprise-level projects, where we often deploy hundreds of crawlers at regular intervals to ensure continuous data collection.

After the data is gathered, it needs to be accessible and ready for deployment across various systems.

At Grepsr, we specialize in managed data extraction services, particularly for large-scale projects. We handle everything from the initial setup of crawlers to the management and delivery of data to our clients.

Before moving forward, one key point: collecting personal data is a strict no-go.

Ethical data extraction is essential, and it’s important to ensure that scraping practices don’t overload the server or disrupt the site’s normal operations.

By managing the frequency of requests and staying mindful of server capacity, we make sure that our data extraction remains respectful and responsible.

Privacy and data protection are top priorities.

So, sorry John, we can’t help with extracting photos from your Samsung Galaxy S10. Not only does it fall outside our technical expertise, but more importantly, your data is personal, and for ethical reasons, we cannot extract it.

Data Extraction Costs: What Grepsr Delivers and What It Doesn’t

Let’s look at all the options you have for data extraction before we narrow down to where Grepsr fits in, and in what cases we can help you out.

1. Self Serve & Platform-Based

If you choose to build a custom solution for web data extraction, this option allows you greater control over the process.

However, it requires a solid understanding of programming and significant experience in coding your own scraper.

While building a custom scraper provides flexibility, it also comes with inevitable challenges.

You will likely encounter various obstacles, especially as websites continuously evolve their structure and implement anti-bot measures.

Without proper handling, these challenges can lead to time-consuming debugging and frustration.

A common approach involves using frameworks like BeautifulSoup, often in combination with additional libraries like Scrapy or Selenium, depending on the complexity of your use case.

As your project scales, you’ll also need to implement strategies for overcoming anti-bot measures such as CAPTCHA, rate limiting, or dynamic content loading.

In our experience, these countermeasures represent a significant portion of the total cost and complexity of building and maintaining scrapers.

Additionally, beyond the scraper, you’ll need backend infrastructure to handle storage, data pipelines, and the virtual environment required to run the scrapers at scale.

All of this demands not just technical expertise but also the ability to manage and scale these systems over time.

This method is best suited for teams that already have seasoned web scraping professionals. Costs for this approach can range from a few hundred dollars to several thousand dollars per month, not including the added expenses of bypassing advanced anti-bot protections.

In a nutshell: Building a custom web data extraction solution provides control and flexibility. However, it requires strong technical skills and can be time-consuming, especially with challenges like anti-bot measures and scaling infrastructure. Costs can increase quickly, making this approach best suited for teams with experienced web scraping professionals.

2. Self Serve & Tech-Constrained

While the first method is designed for those with extensive coding skills, this option caters to individuals without a strong technical background.

These off-the-shelf tools enable users to extract data by leveraging features such as Macros, XPath, CSS selectors, and more.

Typically, platforms in this category offer a simple and intuitive Graphical User Interface (GUI), allowing users to visually select the data they need and automate the extraction process.

However, there are limitations to this approach. These tools often struggle with semi-structured data, pages that rely heavily on JavaScript (JS), or websites employing advanced anti-bot measures.

While they are user friendly, their functionality may not be sufficient for more complex or large-scale data extraction projects.

We recently launched Pline, a straightforward and intuitive data extraction tool that falls under this category.

Pline is designed to make data collection accessible to non-technical users.

With Pline, you can collect up to 1,000 records per month free of charge. For those with larger data needs, there are three paid plans available, each offering more capacity and features.

Additionally, special discounts are currently available since Pline is a fresh release.

You can view our pricing options below:

In a nutshell: Off-the-shelf data extraction tools are designed for non-technical users. They provide a simple interface and use features like XPath and CSS selectors for automation. However, they often struggle with complex websites using JavaScript or anti-bot measures. These tools may also not be suitable for large-scale projects. Pline, our new release, offers a free plan with paid options for advanced features.

3. Managed Service & Tech-Constrained

Now, for the third option – though it’s not exactly our approach to data extraction.

The ‘Managed Service & Tech-Constrained’ route typically involves ad-hoc development through either an in-house IT team or contractors from platforms like Upwork or Fiverr.

These are custom-built solutions tailored to very specific use cases. Say you need to collect news data from multiple sources.

Your goal might be to monitor news publications that mention specific keywords like ‘climate change’, or related terms like ‘green energy’ or ‘climate conscious’.

In this case, you might hire a freelancer from Upwork to build a solution for you.

So far, so good.

However, here’s the key issue with one-off crawlers: once you build a crawler to extract data for a one-time project, it becomes redundant after use.

This means all the resources invested in building that crawler goes to waste if it’s not reused.

As a result, freelancers or contractors may charge a premium for such one-off projects, as they’ll need to account for the limited lifespan of the crawler.

At Grepsr, we’ve focused on data extraction for over 12 years. During this time, we’ve developed a proprietary web scraping framework. This framework helps us quickly identify where your data is and the best way to extract it.

Our experience gives us a clear advantage, allowing us to act efficiently. Chances are, we already have a ready-to-go crawler that fits your data extraction needs. This allows for fast, seamless implementation with minimal setup required.

And even if we don’t have a solution readily available, our experience kicks in – the more than 10,000 hours of practice of our web scraping veterans.

We have the capability to swiftly tailor and deploy a customized solution to meet your specific needs.

This factor alone significantly lowers the cost per project, as you’re not incurring the expense of building a new crawler from scratch.

More on this in the next section.

In a nutshell: The ‘Managed & Tech-Constrained’ option involves hiring freelancers for custom data extraction. However, one-off crawlers become redundant after use, wasting resources and increasing costs. Established providers like Grepsr reduce costs by using pre-built or easily customizable solutions.

4. Managed Service & Platform-Based

Now, the ‘Managed Service & Platform-Based’ option is where we truly shine. At Grepsr, we offer a fully managed, plug-and-play service. This eliminates the need for customers to deal with technical complexities.

You won’t have to worry about setting up crawlers, solving CAPTCHAs, or managing IP rotations.

Our managed data acquisition service is centered around our Data Management Platform.

We don’t just build the crawlers and scrapers to extract data. You also get access to the platform. On this platform, you can monitor the performance of your data extraction workflows. And the best part? You can do it in real-time.

This platform allows you to run crawlers at your convenience, at the frequency you choose, and even add team members to collaborate on projects.

Beyond that, it seamlessly integrates the web data directly into your system, ready for downstream analysis, powering everything from dashboards to Business Intelligence (BI) platforms.

If that’s not enough, you can also access and manage various datasets within the platform for easy consumption and use.

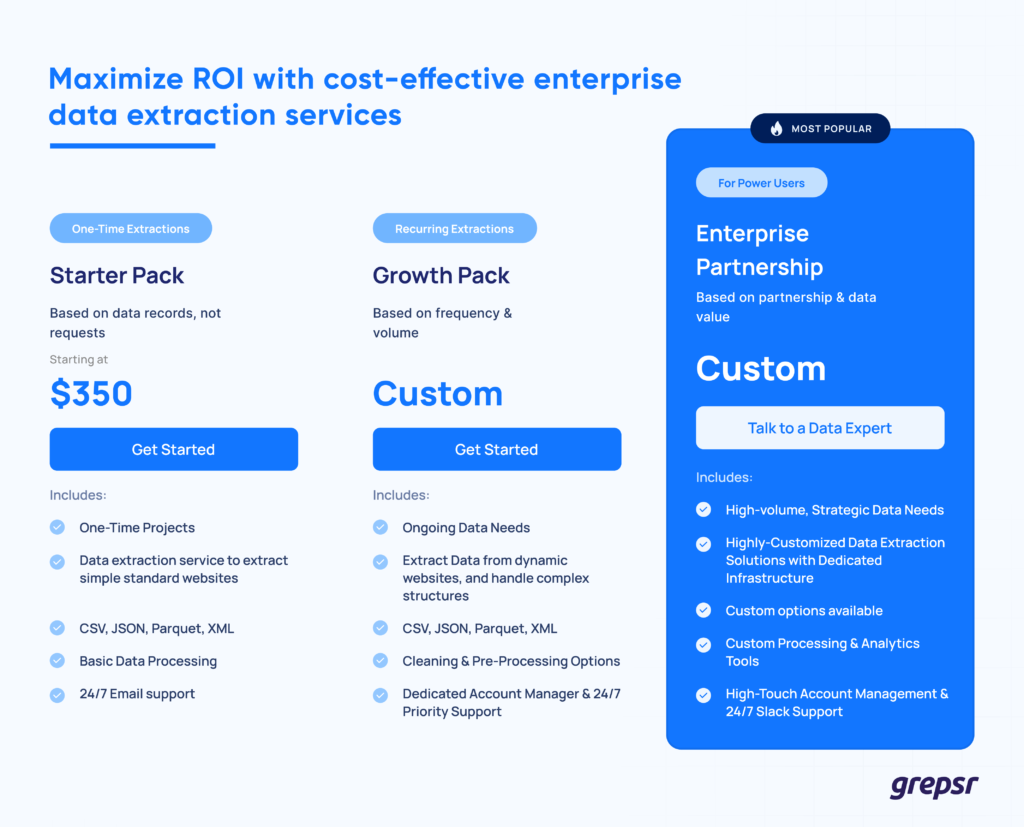

The key advantage of this approach? You pay for one thing only—data. Unlike other vendors who layer their pricing with charges for IP rotation, CAPTCHA-solving, and, worst of all, per request, we keep things simple.

You’re not billed for each “knock” on a website. Instead, we charge based on the records delivered.

Without careful consideration, you could end up feeling like Russell Crowe in A Beautiful Mind, caught in a maze of complex pricing models from other vendors.

At Grepsr, we simplify access to data, making it straightforward and cost-effective.

Once you subscribe, you’ll have access to the exact web data you need at the scale you desire. We grow with our clients—starting with small, one-time projects and expanding as their data needs evolve.

This pricing model has helped us build long-term trust and partnerships with our clients, supporting their growth as we scale alongside them.

In a nutshell: Grepsr’s ‘Managed Service & Platform-Based’ option takes care of technical tasks like crawler setup and CAPTCHA-solving, letting you focus on the data. With a real-time platform, you can monitor, schedule, and manage extractions effortlessly. Pricing is simple – you only pay for the data delivered. This makes it both cost-effective and scalable as your data needs grow.

Simplifying Your Data Extraction Cost for Smarter Budgeting

In the world of data extraction, not every request is as straightforward as John’s Samsung Galaxy dilemma.

While we couldn’t assist him in retrieving his lost photos, we’re ready and fully equipped to serve Malcolm, Mary, Shelly, and even a different John – if he needs web data.

All the way from price tracking to news monitoring, and everything in between.

At Grepsr, we’ve spent over a decade simplifying access to web data. We offer a managed service that eliminates technical headaches. And we make sure you pay only for the data you need.

Whether you’re looking to start small or scale big, we’ve got the infrastructure, expertise, and platform to grow with you.

We’re here to deliver the data you need – quickly, ethically, and at a cost that makes sense.